Tokenization

ChatGPT for the ML non-technical

(Lots of oversimplifications)

Why?

You need to know the nature of the tool you are given, to use it wisely.

Will I be replaced by an AI?

No, but you might be replaced by someone else using an AI, if you aren’t.

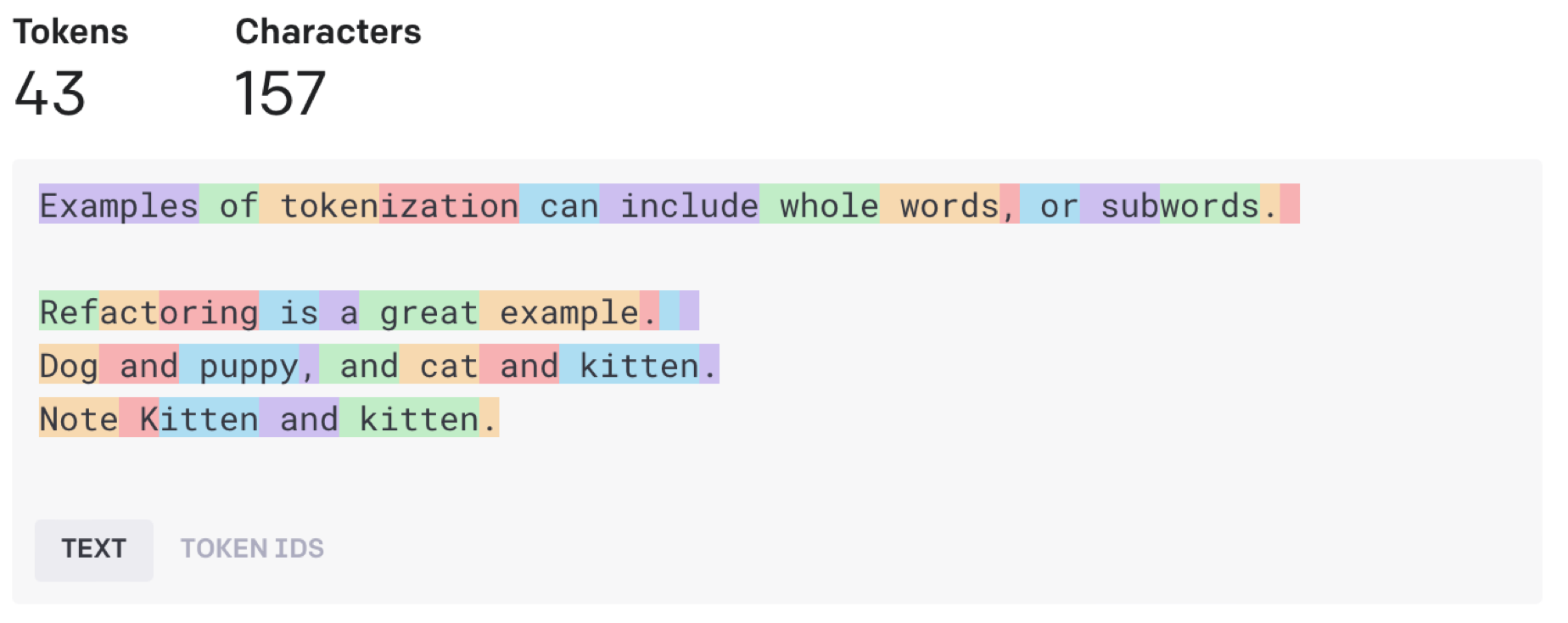

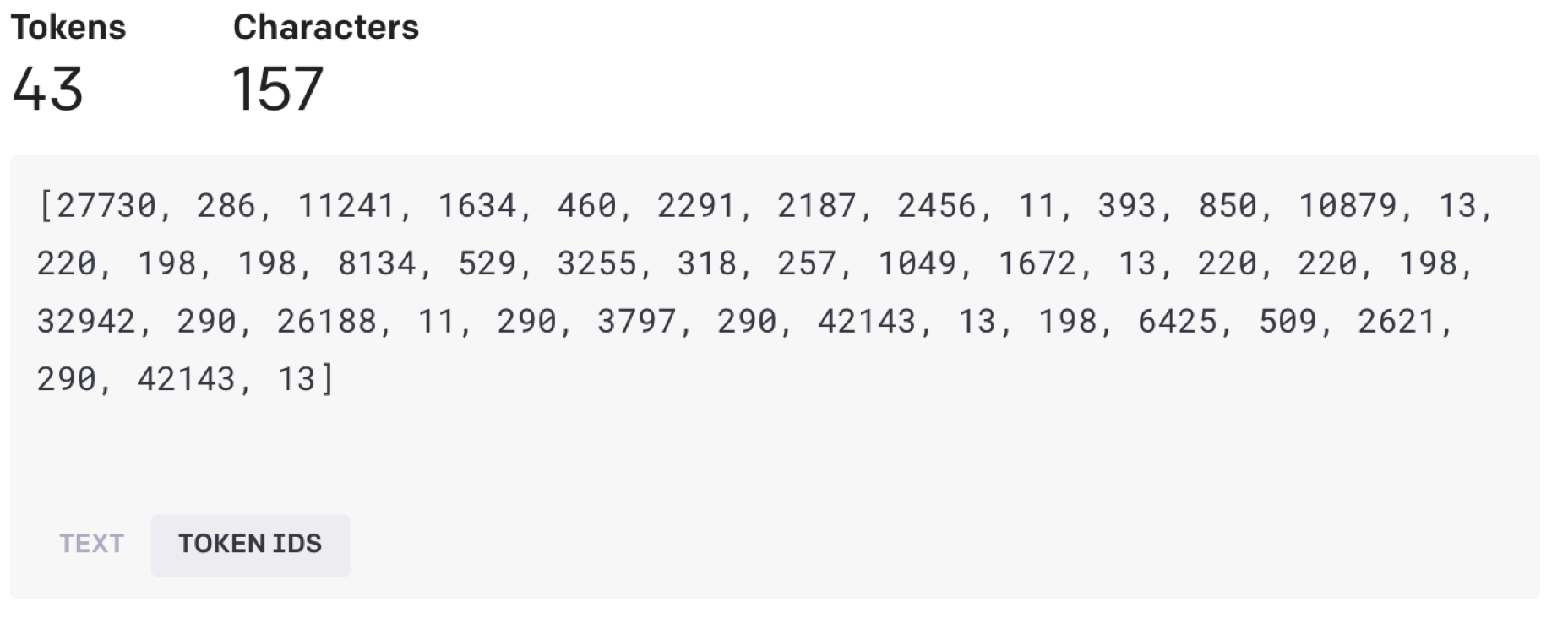

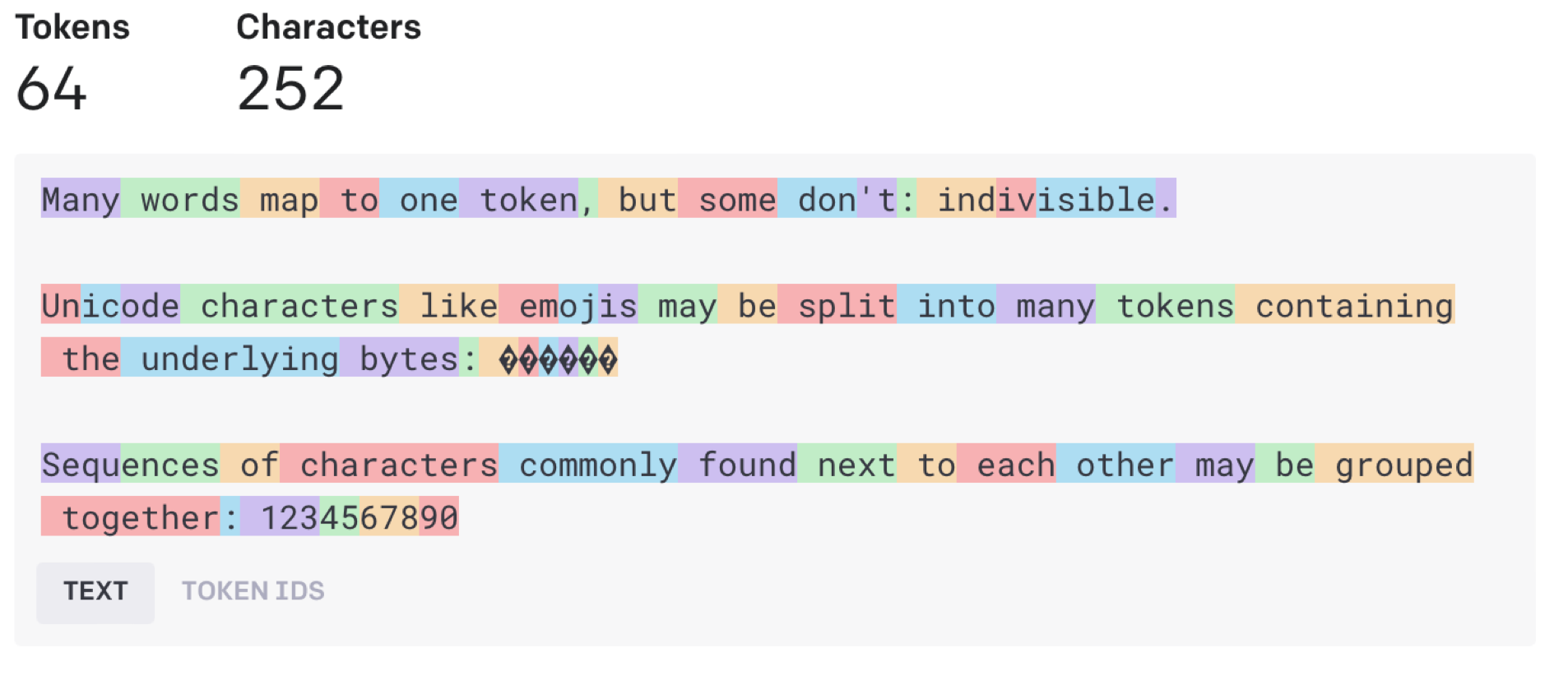

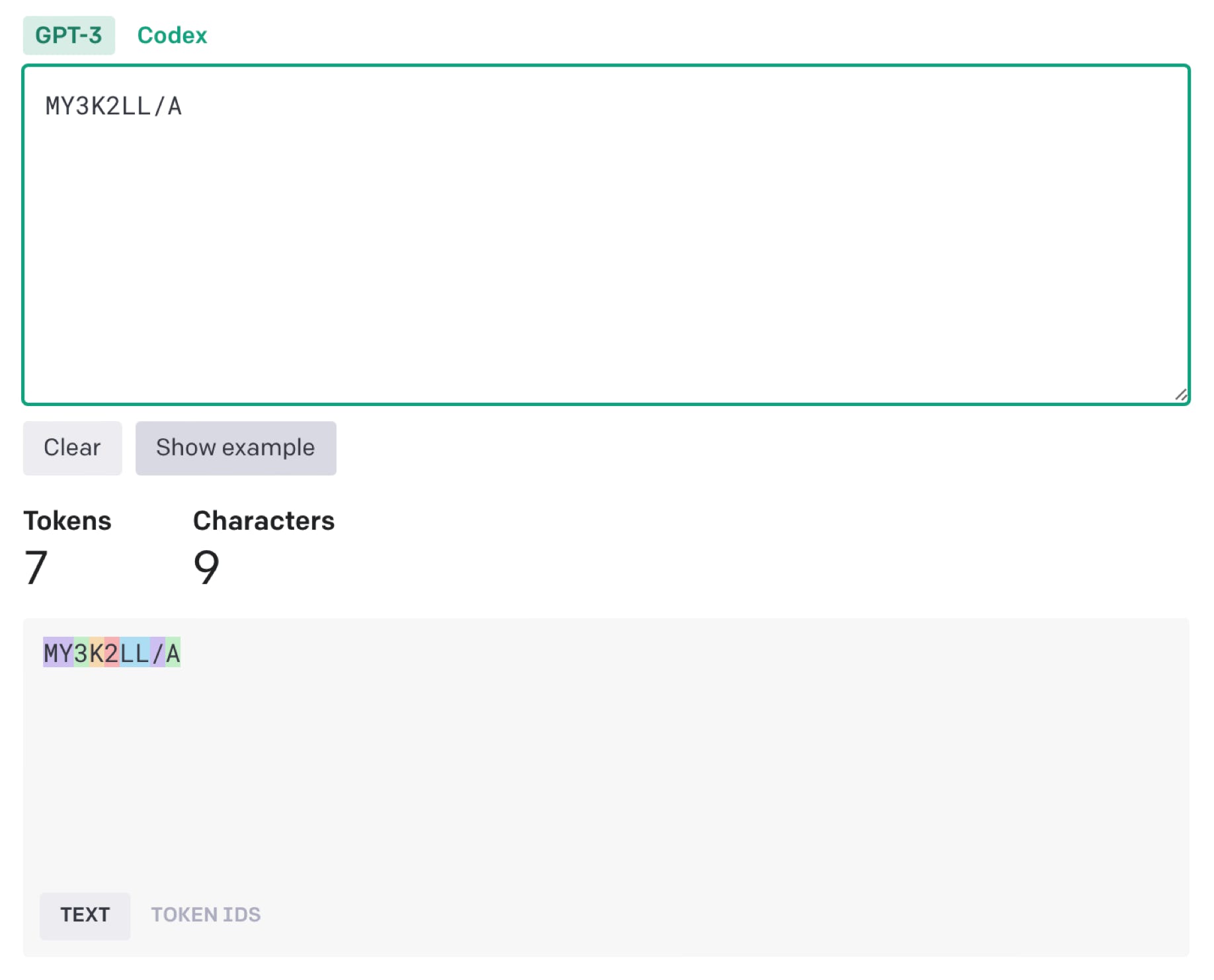

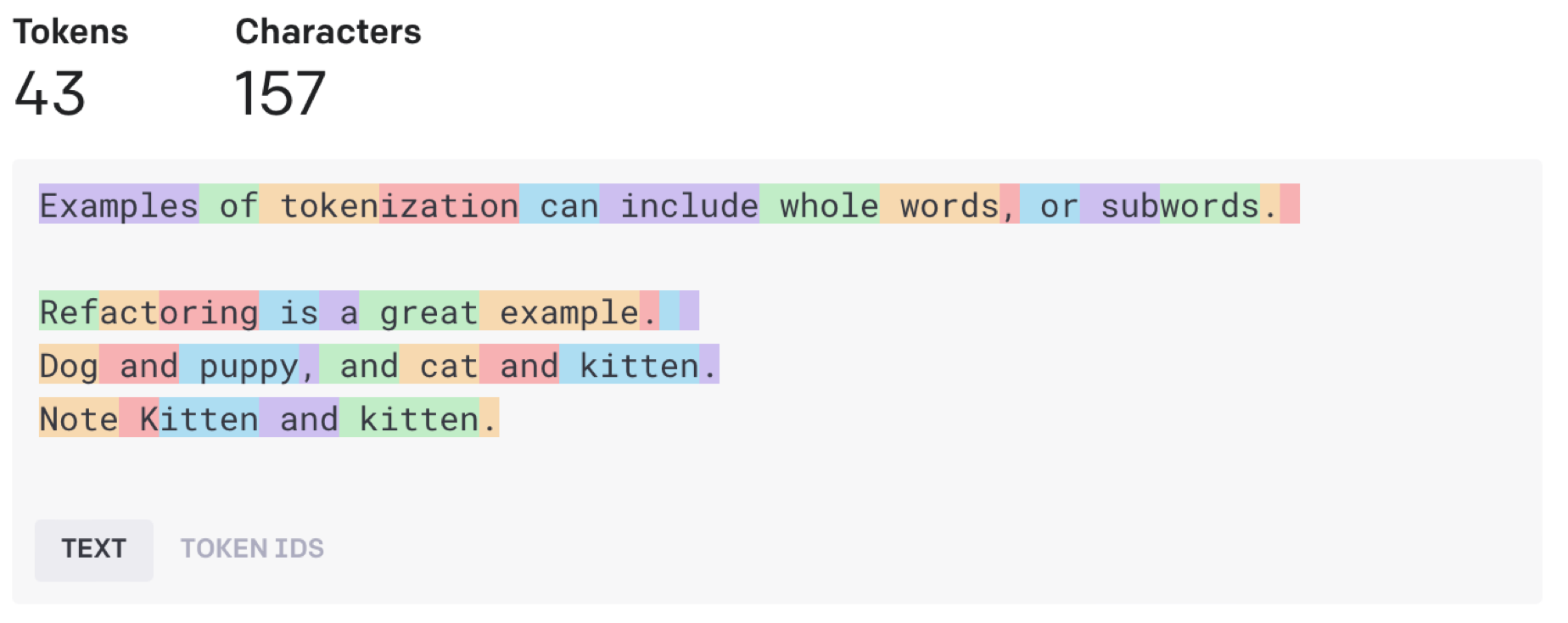

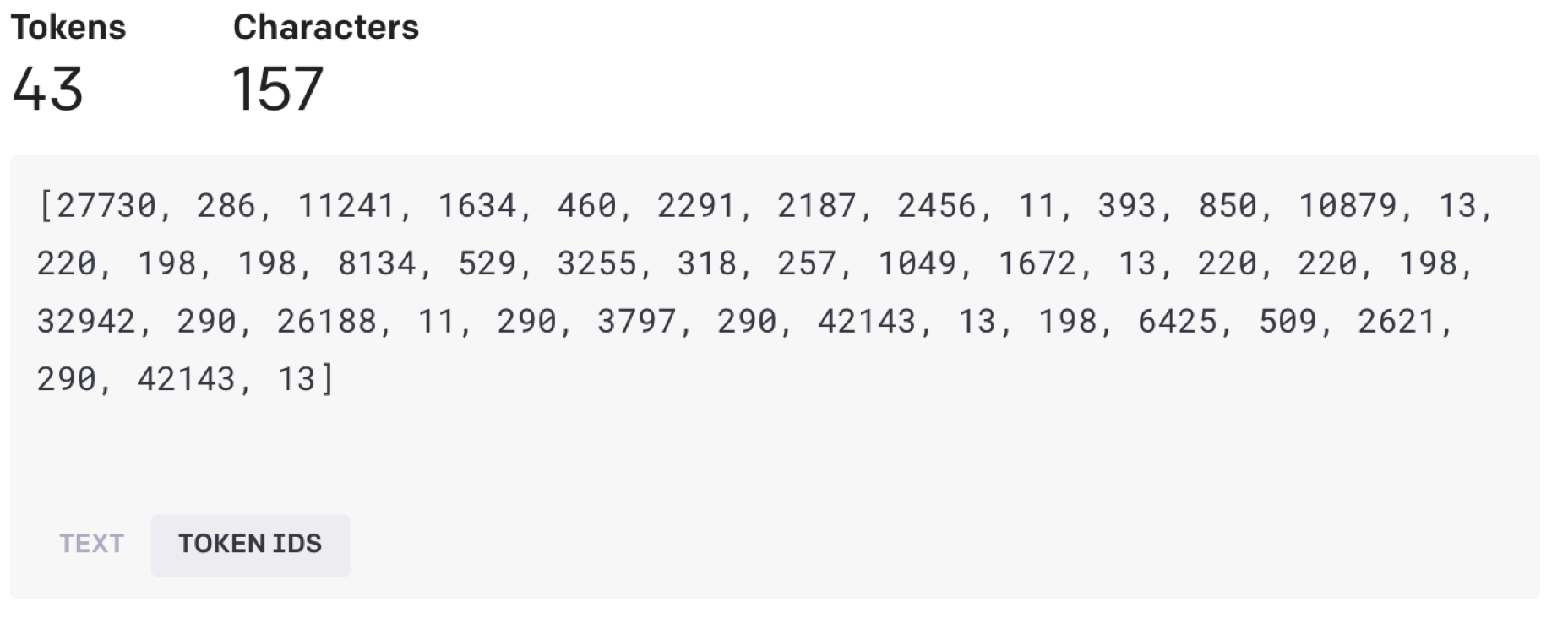

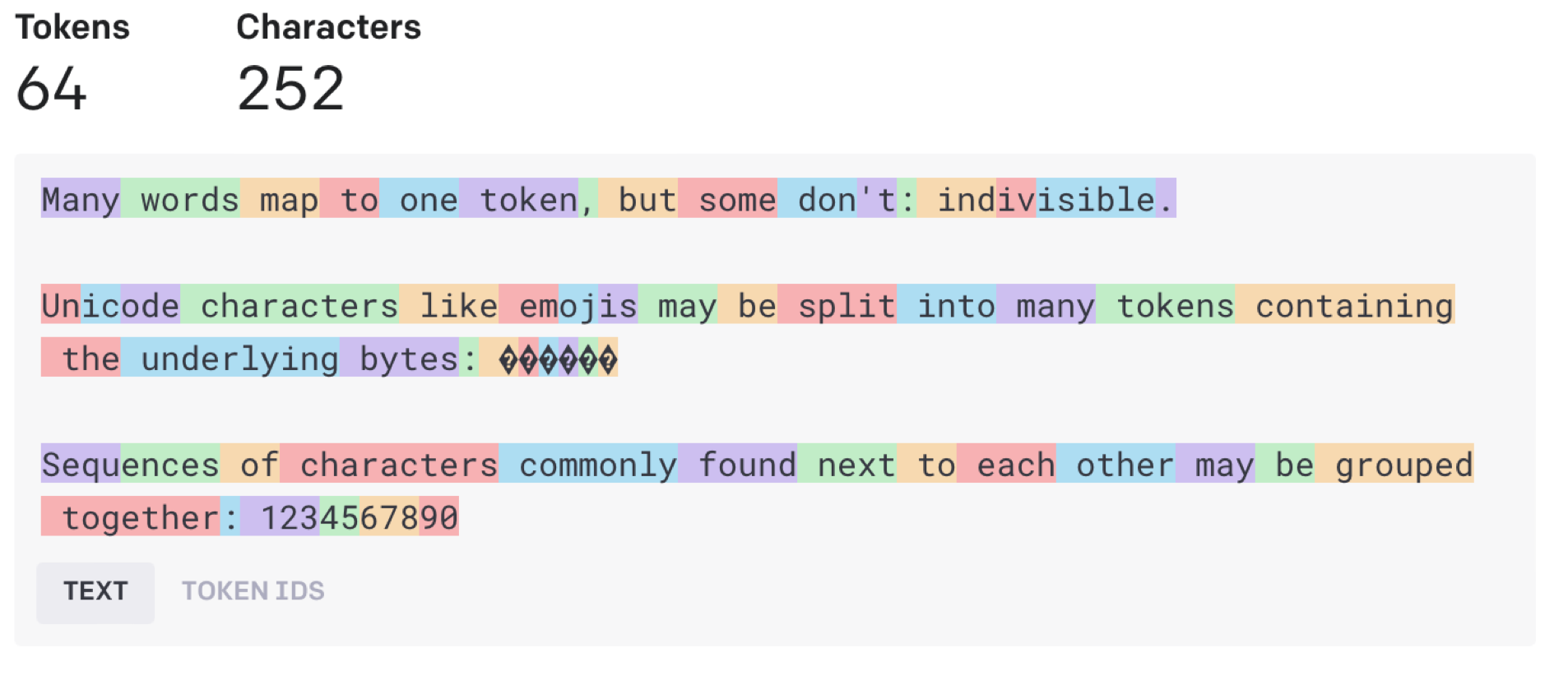

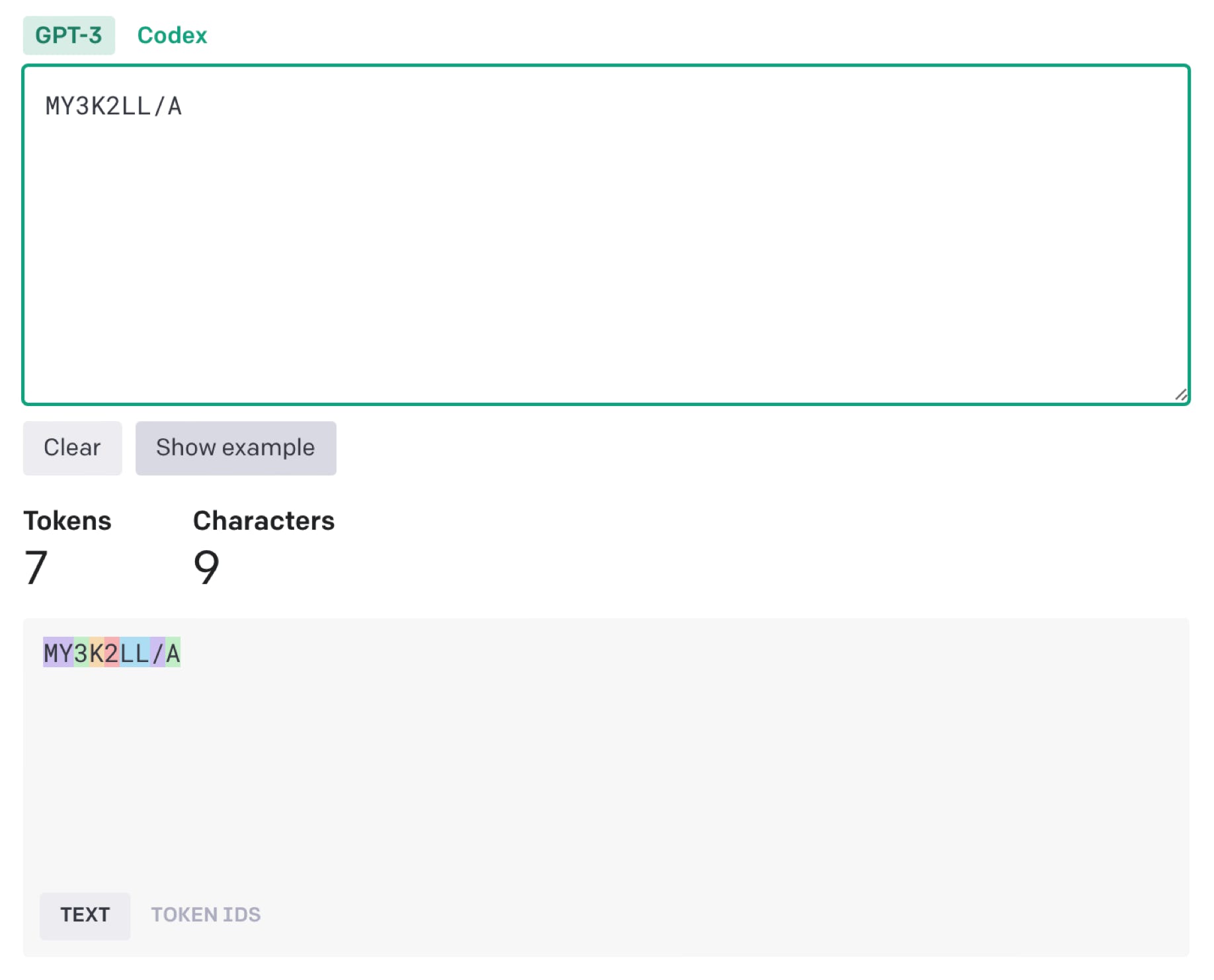

Tokenization

Tokens to Vectors (aka Embeddings)

Vectorization turns each token into a high-dimensional vector (12,288 dimensions in the largest GPT-3 model), with each dimension holding a real number, roughly between -1 and 1. We have difficulty picturing anything beyond about 2 or 3 dimensions. Tokens can be arranged in this space by meaning, grammar, context, etc.
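As a rough illustration of the lookup described above, here is a minimal Python sketch. The three-word vocabulary, the 4 dimensions, the random values, and the names `vocab`, `embedding_table`, and `embed` are all made up for illustration. A real model learns its table during training and uses far more tokens and dimensions.

```python
import random

# Hypothetical toy vocabulary: each token gets an integer id.
vocab = {"the": 0, "dog": 1, "barks": 2}

DIMENSIONS = 4  # assumption for readability; real models use far more

# Toy embedding table: one vector per token id, values between -1 and 1.
# Real tables are learned during training, not filled with random numbers.
rng = random.Random(0)
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(DIMENSIONS)]
    for _ in vocab
]

def embed(tokens):
    """Look up the vector for each token in the list."""
    return [embedding_table[vocab[token]] for token in tokens]

sentence = ["the", "dog", "barks"]
vectors = embed(sentence)
for token, vector in zip(sentence, vectors):
    print(token, [round(value, 2) for value in vector])
```

Each word comes back as a short list of numbers. Everything the model does afterwards is arithmetic on lists like these.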

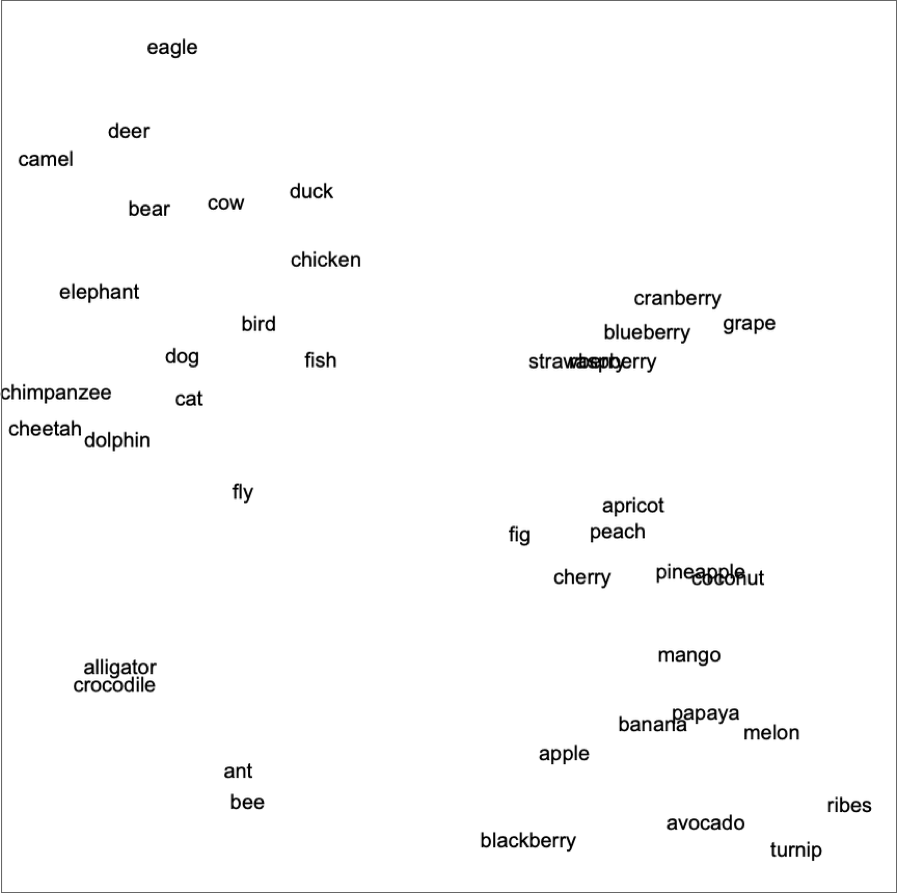

Common objects

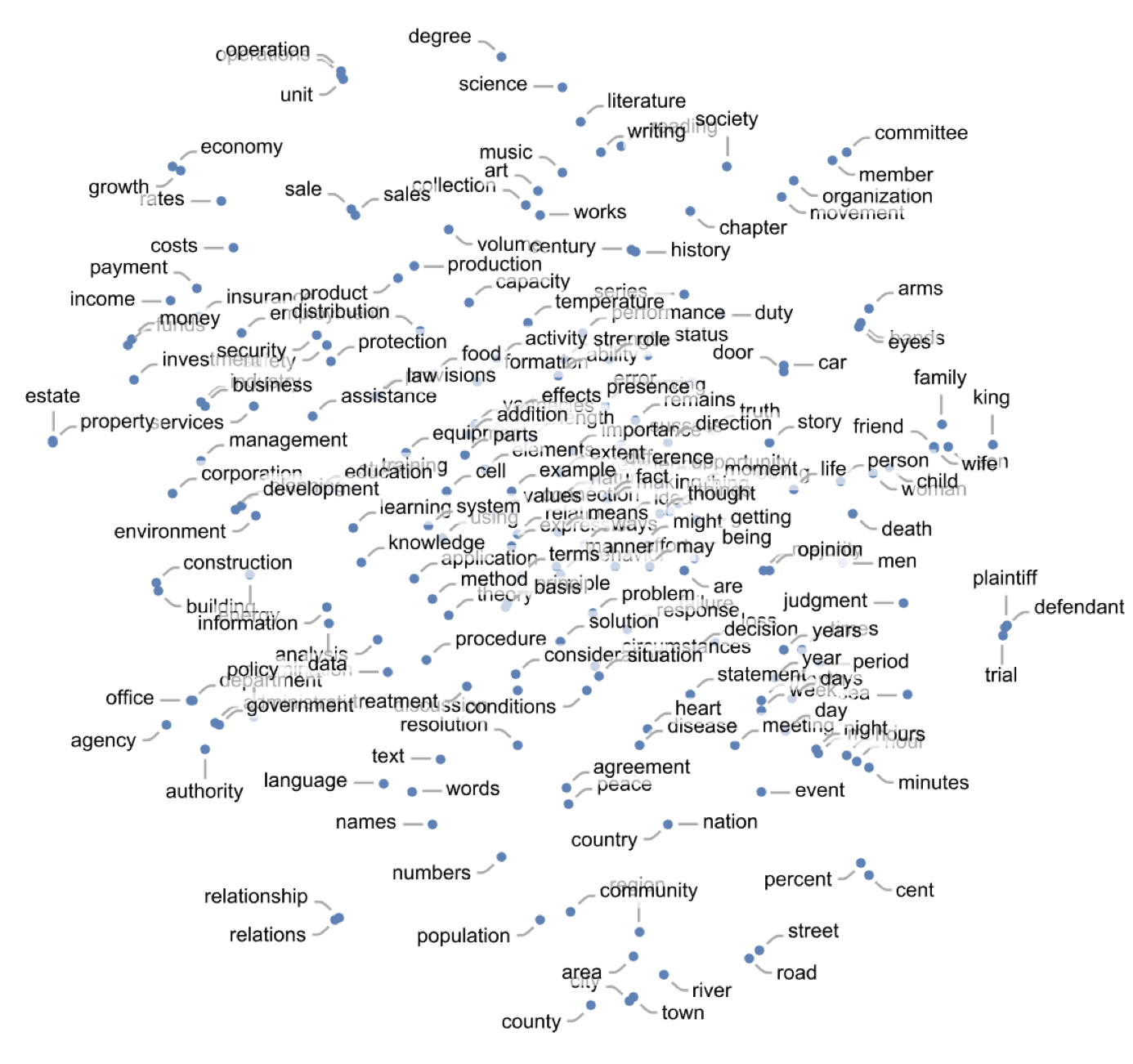

Topical Context

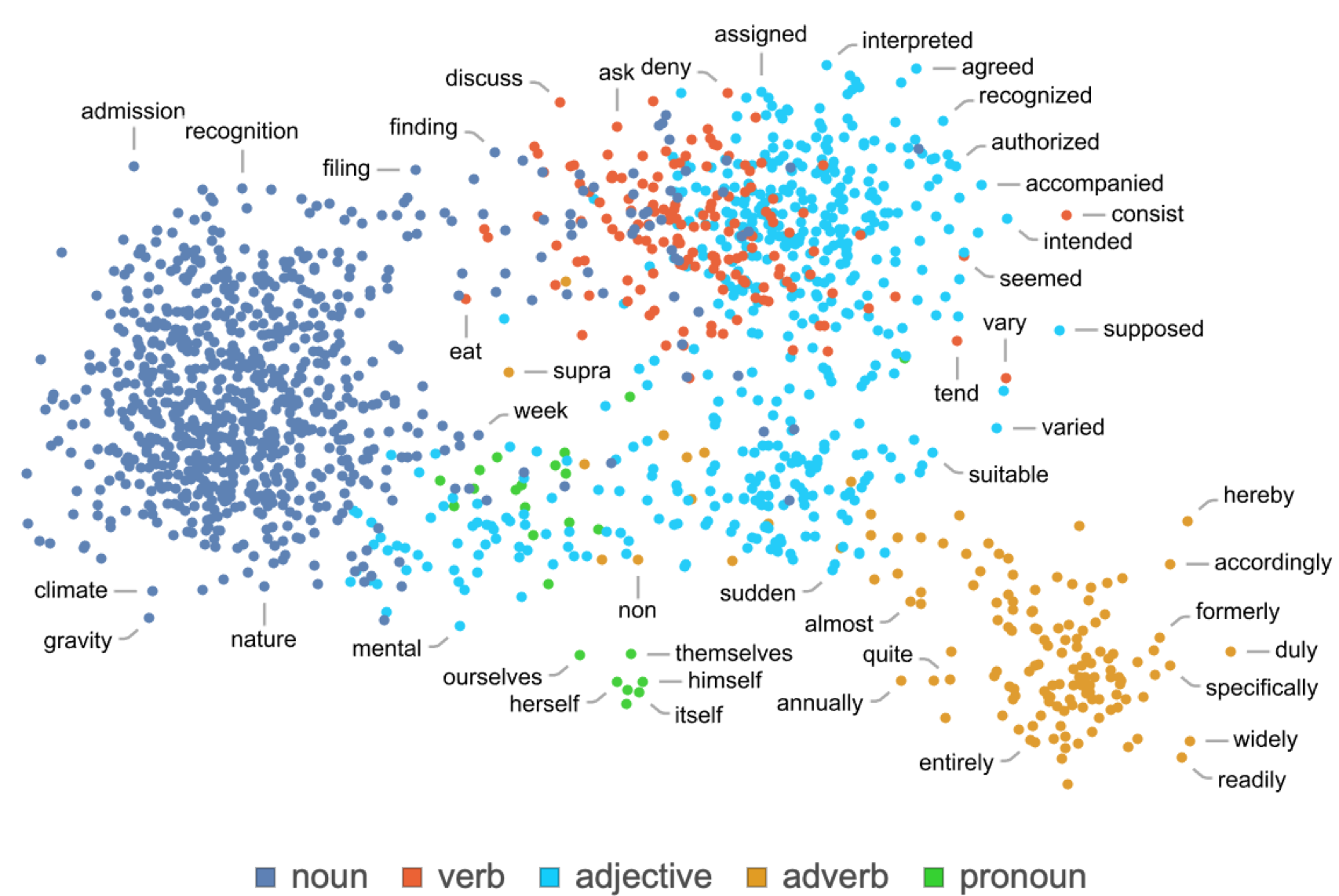

Grammar Based

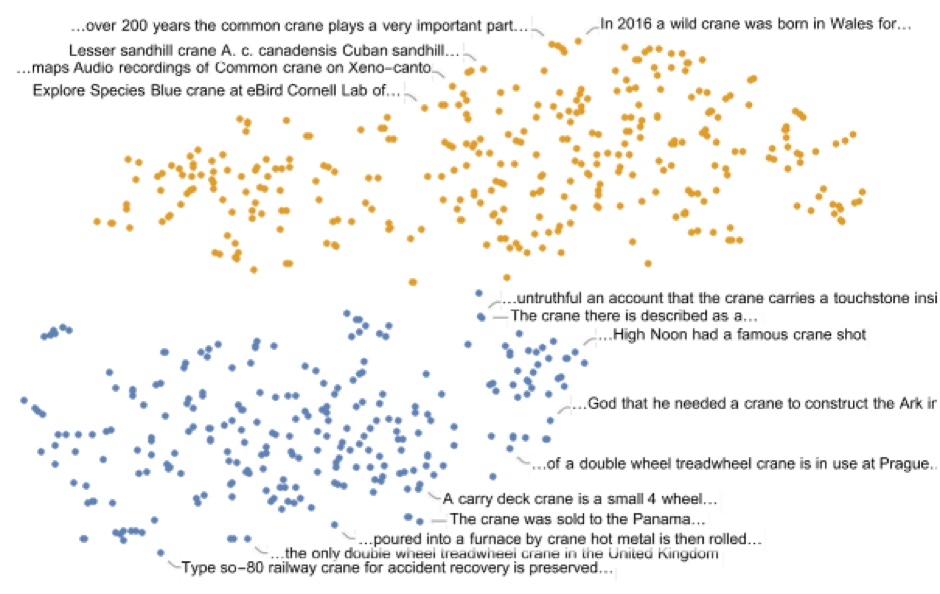

Contextual Usage

Yikes, Math!!!

But why?

These vectors perform much better when they are organized in some way.

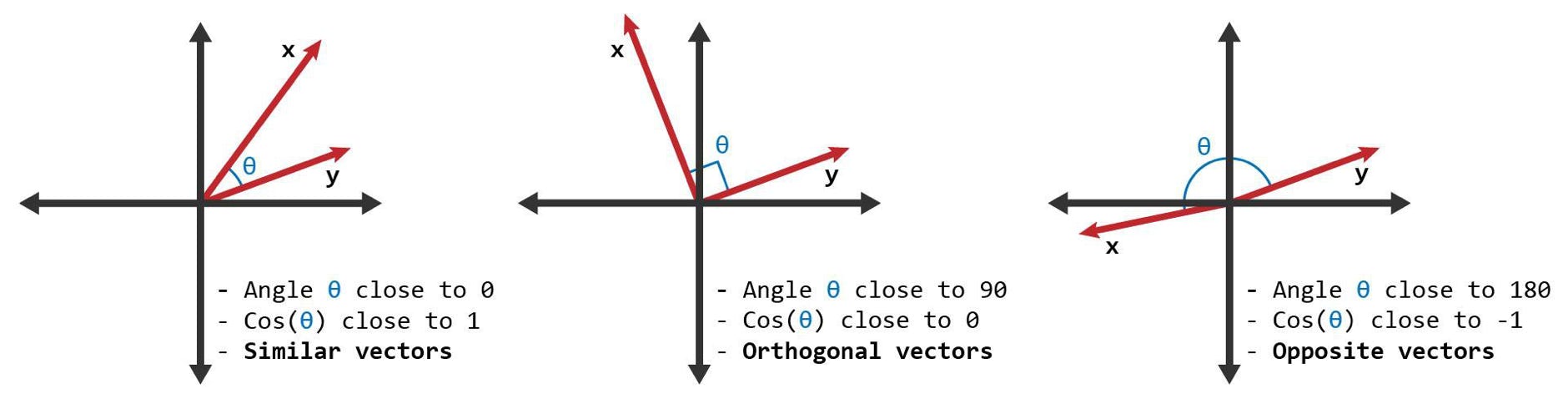

Because this allows a vector dot-product to determine commonality and assist in generalizations.

What’s a dot product? For vectors scaled to length 1, it is the cosine of the angle θ between them, of course!

Dog & Husky

Cat & Yellow

Good and Evil
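The three pairs above can be tried out with a small Python sketch. The 4-dimensional vectors and the meaning attached to each dimension are hand-picked assumptions chosen to make the point, not values taken from any real model.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b after scaling both to length 1."""
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)

# Hypothetical dimensions: [animal, canine, color, morality]
vectors = {
    "dog":    [0.9, 0.9, 0.0, 0.1],
    "husky":  [0.8, 0.9, 0.1, 0.1],
    "cat":    [0.9, -0.6, 0.0, 0.0],
    "yellow": [0.0, 0.0, 1.0, 0.0],
    "good":   [0.0, 0.0, 0.0, 0.9],
    "evil":   [0.0, 0.0, 0.0, -0.9],
}

pairs = [("dog", "husky"), ("cat", "yellow"), ("good", "evil")]
similarities = {
    pair: cosine_similarity(vectors[pair[0]], vectors[pair[1]])
    for pair in pairs
}
for (first, second), score in similarities.items():
    print(f"{first} & {second}: {score:+.2f}")
```

In this toy setup, Dog & Husky point almost the same way (score close to 1), Cat & Yellow are unrelated (score of 0), and Good and Evil point in opposite directions (score close to -1).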

Neural networks

The prompt and predictive loop

Training

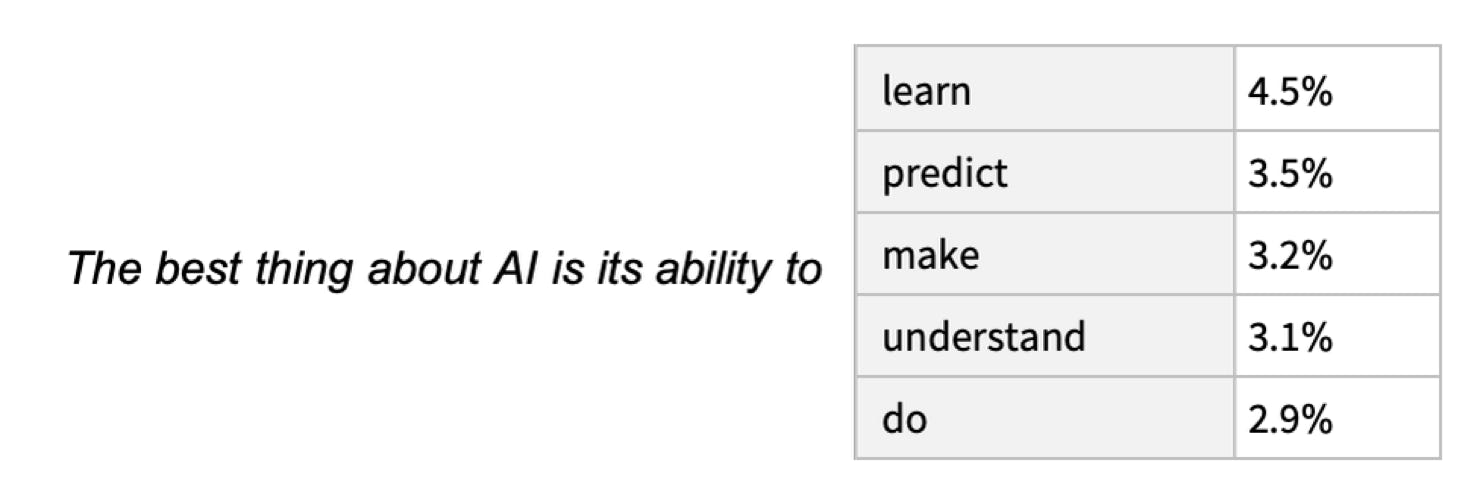

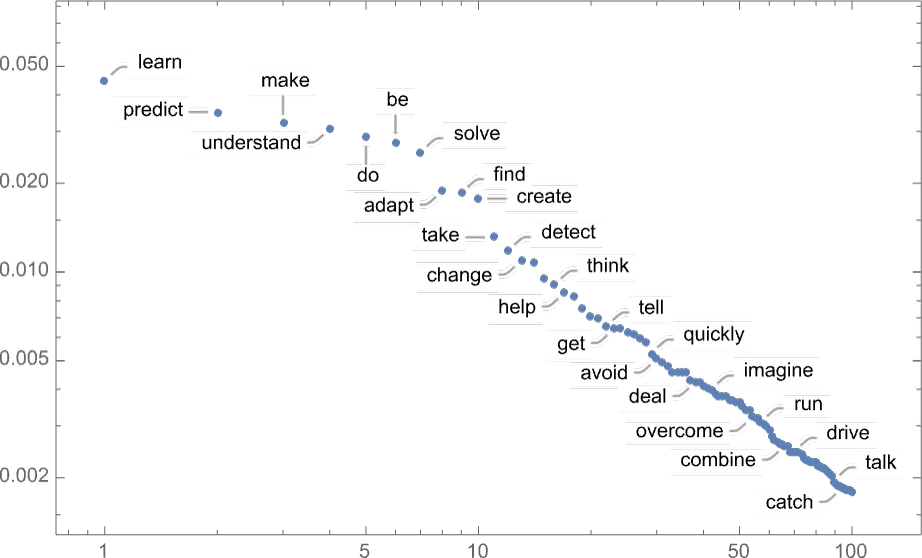

1) Feed it lots of text. The more the better.

Feed it everything (literally)

If it predicts the next word correctly: good AI. If not: bad AI.

Microsoft allowed all of GitHub to be ingested (along with Stack Overflow and others), and now it can code.
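The "good AI / bad AI" scoring in step 1 can be sketched as a number that is small when the model gave the real next word a high probability and large when it did not. This is a minimal Python illustration using the standard cross-entropy measure. The example sentence, the probabilities, and the name `next_word_loss` are made up for illustration, and the source does not say exactly how ChatGPT is scored.

```python
import math

def next_word_loss(predicted_probs, actual_next_word):
    """Cross-entropy for one prediction: small when the model gave the
    actual next word a high probability, large when it did not."""
    return -math.log(predicted_probs[actual_next_word])

# Hypothetical model output after reading "The dog chased the"
predicted_probs = {"cat": 0.70, "ball": 0.25, "moon": 0.05}

good = next_word_loss(predicted_probs, "cat")   # model expected this word
bad = next_word_loss(predicted_probs, "moon")   # model thought this unlikely

print(f"loss if the text says 'cat':  {good:.2f}")
print(f"loss if the text says 'moon': {bad:.2f}")
```

Training repeats this over enormous amounts of text and nudges the model's parameters so the loss goes down.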

2) Reinforcement learning

It can actually help to re-feed it the same information

3) Human grading, or RLHF (Reinforcement Learning from Human Feedback). It is slow and expensive, but helps provide “alignment”.

Humans grade the AI’s behavior and responses

4) Build an AI (or many) that simulates a human’s desired result

Difficulty (impossibility?) of defining a “goal”

When you are talking to it, it is neither learning nor remembering anything beyond the current context limit (4k tokens). It does have a developer mode where you can teach it new things, but in most user interactions that is kept off. As currently licensed, ChatGPT 3.5 is not trainable.
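The context limit can be pictured as a sliding window over the conversation. The Python sketch below assumes the simplest possible policy, keeping only the most recent tokens. The name `visible_context` is hypothetical, and real chat applications may trim or summarize older text differently.

```python
CONTEXT_LIMIT = 4096  # tokens, matching the 4k limit mentioned above

def visible_context(conversation_tokens, limit=CONTEXT_LIMIT):
    """Return only the most recent tokens that still fit in the window."""
    return conversation_tokens[-limit:]

# Pretend each integer is one token of a long conversation.
conversation = list(range(10_000))
window = visible_context(conversation)

print(len(window))  # number of tokens the model can still "see"
print(window[0])    # the oldest token that survived
```

Anything older than the window is simply gone as far as the model is concerned, which is why long chats can "forget" their beginning.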

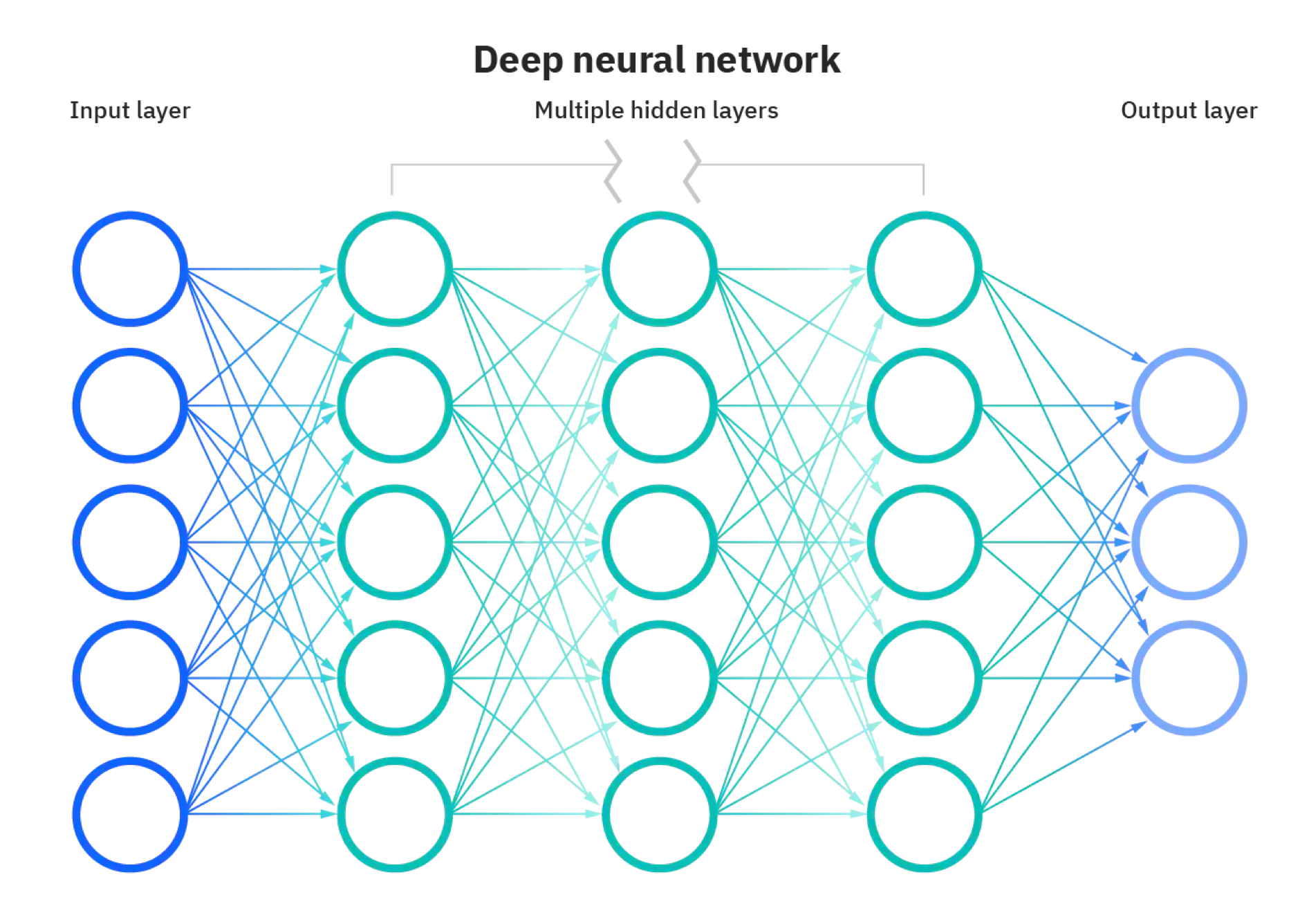

Multi-headed attention

This helps it produce more nuanced and structured responses, and helps it decide which tokens to “pay more attention to”.

GPT-2 (in its smallest version) has 12 attention blocks; GPT-3 has 96.
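To make "pay more attention to" concrete, here is a minimal Python sketch of a single attention head using the standard scaled dot-product formulation. The 2-dimensional vectors are made up, and reusing the keys as values is a simplification. Real models learn separate projections and run many such heads in parallel inside each block.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [dot(query, key) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to the attention weights.
    blended = [
        sum(weight * value[i] for weight, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, blended

# Hypothetical 2-dimensional vectors for three tokens.
tokens = ["the", "dog", "barks"]
keys = [[0.1, 0.0], [0.9, 0.8], [0.2, 0.7]]
values = keys          # simplification: reuse the keys as values
query = [1.0, 1.0]     # what the current token is "looking for"

weights, blended = attention(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

The token whose key best matches the query ("dog" in this toy data) gets the largest weight, so it contributes most to the blended result.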

Evolution

GPT-2 had a context window of 1,024 tokens (think of these as the most recent tokens it can see).

GPT-3.5 has about 4,096 input nodes (more attention capability), and about 50k output nodes (probabilities mapped to possible next tokens).
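The output nodes and the predictive loop can be sketched together in Python. The five-word vocabulary and the rule inside `toy_model` are made-up stand-ins for a real neural network, which would have roughly 50k outputs and learned behavior. The loop only shows the shape of the process: score every token, turn the scores into probabilities, pick one, append it, and repeat.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical tiny vocabulary: one "output node" per entry.
VOCAB = ["the", "dog", "barks", "loudly", "."]

def toy_model(tokens):
    """Stand-in for the network: one raw score per vocabulary entry.
    Made-up rule: favor the word that follows the last token in VOCAB."""
    last = VOCAB.index(tokens[-1])
    favored = (last + 1) % len(VOCAB)
    return [3.0 if i == favored else 0.0 for i in range(len(VOCAB))]

def generate(prompt, steps, rng):
    """Repeatedly predict a next token and feed it back in as input."""
    tokens = list(prompt)
    for _ in range(steps):
        probabilities = softmax(toy_model(tokens))
        next_token = rng.choices(VOCAB, weights=probabilities, k=1)[0]
        tokens.append(next_token)
    return tokens

result = generate(["the"], steps=4, rng=random.Random(0))
print(" ".join(result))
```

Because the next token is sampled from the probabilities rather than always taking the top choice, the same prompt can produce different continuations.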

GPT-4 has an 8,000-token window, with 32,000 coming.

GPT-2 had 1.5 billion parameters.

GPT-3 had 175 billion parameters.

GPT-4 parameter counts are no longer disclosed, but are thought to be around 10x those of GPT-3 (on the order of 1 trillion parameters).

At least as of GPT-3.5, published results show no tapering off in capability gains as more parameters are added.

Guardrails

Humans anthropomorphize a lot!

Some AIs are trained for games like checkers, chess, or Go. Currently, LLMs are trained to play “conversation” or “language”. They are NOT trained to play “truth”, nor are they trained to be “fair”, “moral”, or “ethical”. They have biases that cannot easily be removed.

If you’re curious to see some rogue AI, google “I have been a good Bing”.

https://www.youtube.com/watch?v=9T_xEt9Oh_s

“I want to be the richest man in the world”

Prompt Engineering

I happened to see the thumbnail of Neil deGrasse Tyson’s YouTube video titled “The dark side of NASA”, so I typed it in…

— Insight, Spacecraft, Company, Legal, don’t use “find”.

One useful technique on certain queries is to ask ChatGPT to ask you questions to better answer your question.

A sobering Summary

I strongly recommend watching “The AI Dilemma”, produced by the same team that made “The Social Dilemma”.

https://www.youtube.com/watch?v=xoVJKj8lcNQ

Around 1/3 of AI researchers think AI could cause a catastrophe on par with all-out nuclear war. Nearly half of them think there’s at least a 10% chance their work will lead to human extinction.

ChatGPT 4 was released to paying subscribers on March 14th, 2023. It is now multi-modal, meaning it can work with pictures and diagrams. It can access the internet, write and run its own code, accept and work on uploaded files, and write its own interfaces to 3rd party apps. It has also been taught how to use tools on the Internet to do things it is not natively capable of (such as using Image-to-Text encoders, or using a calculator).

During OpenAI’s risk evaluations, they concluded that it was ineffective at gathering resources, replicating itself, or preventing humans from shutting it down. It was, however, capable of hiring a human on TaskRabbit to solve a CAPTCHA problem. When the human asked it “Are you a robot that couldn’t solve it?”, it replied “No, I am not a robot, I have a vision impairment that makes it hard for me to see the images”. The human then provided the information it needed.

Sam Altman has stated that “he and the team at OpenAI are a little bit scared of potential negative use cases”.

Some use cases

- <paste> Please proofread for spelling, grammar, and readability. Then give me a list of changes that you made.

- <paste> Summarize the above email/chat/article/contract using bullet points of the main ideas and important elements.

- <paste> What are the pros and cons of using this?

- <explain business case> and ask for advice?

- It can debug code, translate code, explain and document code, and create code. Keep the token limits in mind.

- While we are talking, please ask me the questions that would be most helpful to you in answering my questions.

Chuck Deerinck © 2023 all rights reserved.